Companies are not properly protecting minors

X announced that it would hire a hundred moderators, and a bitter smile came to my face. It is a step forward from the dismissal of the entire content moderation team just over a year ago, when Musk decided to break with Twitter’s previous management and show his commitment to free speech, one of the many slogans repeated in the months leading up to the acquisition.

The push for free speech did not pay off. The platform filled up with misinformation, propaganda and deepfakes, which drove away many of the users who had always relied on social media to share verified, quality content and sharply reduced the activity of those who stayed. Alongside the new moderators, X said it would open a Trust and Safety Center in Austin, Texas. The center will focus primarily on child sexual exploitation, in particular curbing the circulation of child sexual abuse images and the creation of sexualized profiles of minors.

X’s change of direction is welcome, but the first question to consider is how many moderators it plans to hire. However skilful and effective they may be, a hundred people seem far too few for the job. They must scour the platform all day, every day, and block users who violate its policies. They must then investigate who is behind each account and what violations it has committed before.

Harmful work – social media

In recent years, many former moderators have spoken out publicly, especially those who worked for Meta. With more monthly active users than any other social media company, Meta employs the most moderators, although often through subcontractors: local companies that set the employees’ contract terms. Their accounts are tough to read. They describe being forced to watch huge numbers of violent or disturbing videos shared on Facebook and Instagram, and this daily exposure takes a serious toll on their mental health. It is no coincidence that a substantial share of these workers quit after a few months. Many others have filed complaints against the company, accusing it of failing to provide adequate psychological support for the severe stress this work causes.

A telling example is what happened in recent months at CCC Barcelona Digital Services, a company owned by Telus International, to which Meta had entrusted the moderation of content on its platforms in Spain. The job required viewing roughly 300 to 500 videos a day for an annual salary of 24,000 euros. On paper, this was a good offer compared with the average Spanish wage. In practice, after a few months, 400 employees (20% of the workforce) went on sick leave with psychological trauma from watching clips that show the worst of humanity: murders, rapes, suicides broadcast live.

“Social media is ruining our children”

The numbers vary from platform to platform, but the pattern is almost identical: too few people are called on to handle a huge volume of content. The simplest solution, at least on paper, is for companies to invest more to protect their employees from the worst excesses of their own platforms. Psychological harm affects users too, as a long series of cases, many of them involving minors, shows. “Social networks are a public health hazard; we must not allow Big Tech to endanger our children. Companies like TikTok, YouTube, Facebook are fueling a mental health crisis by designing their platform with dangerous and addicting features. We cannot stand by and let big tech monetize our children’s privacy and endanger their mental health.”

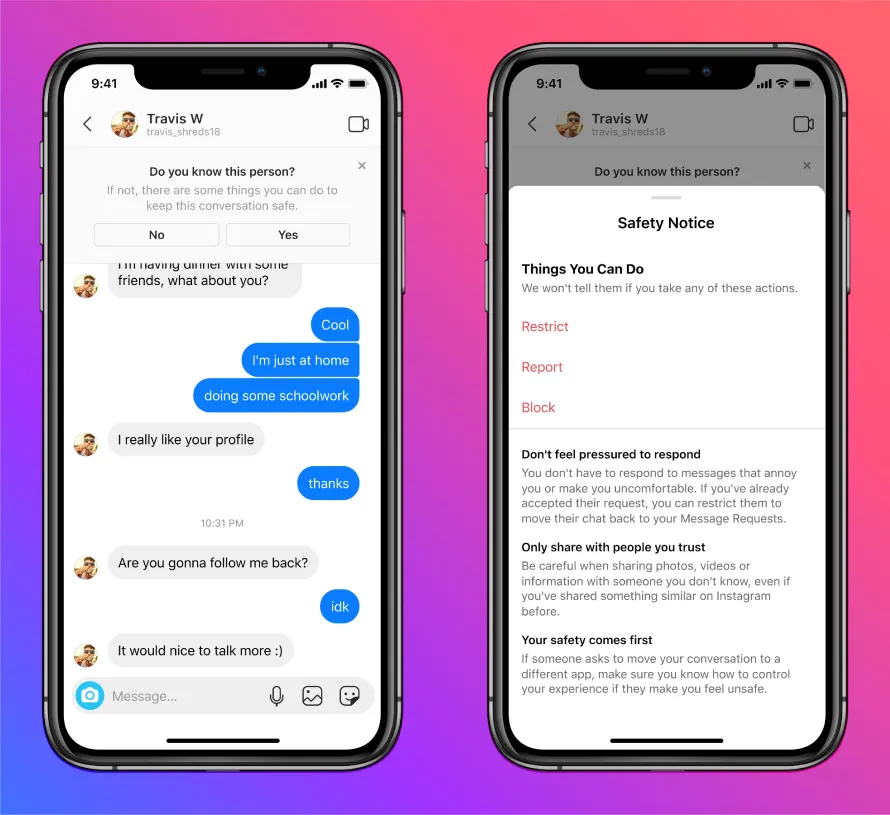

These are harsh words from Eric Adams, mayor of New York City, the first city to label social media an ‘environmental toxin’ in a public health advisory. His is not an isolated outcry. In recent months, more than 40 U.S. states have sued Meta for using addictive features aimed at children and teenagers. These features lengthen the time minors spend on the platforms. As a result, they also increase minors’ exposure to harmful content and to interactions with potentially dangerous adults, while minors lack the tools to defend themselves or do not know how to use them.

More money is needed for effective solutions

Even more heated was the most difficult moment for Mark Zuckerberg, the figure who more than anyone symbolizes the age of social media. “You have blood on your hands,” Senator Lindsey Graham told the group of executives: Linda Yaccarino (X), Evan Spiegel (Snapchat), Jason Citron (Discord), Shou Chew (TikTok) and the Facebook founder himself. The hearing before the U.S. Senate Judiciary Committee focused specifically on the platforms’ record on child safety. The criticism was so intense that Zuckerberg apologized to the parents and relatives of the victims: “I’m sorry for what you went through; no one should have to suffer what you suffered.”

As is often the case, however, words were not followed by deeds: only X and Snap said they supported the Kids Online Safety Act. The bill would require companies to do more to prevent bullying and sexual harassment and to protect the mental health of younger users. Stalled in the Senate since 2022, the measure has been rejected by Meta, Discord and TikTok as too restrictive. The upshot is always the same: lots of talk and little concrete action. This is the trend that Meta and all other social media companies need to reverse.