OpenAI’s new chatbot ChatGPT took the world by storm shortly after its release in November 2022. The chatbot reached one million users within the first five days, providing informative, intelligent answers to various questions and prompts. With its answers outperforming those of many existing chatbots, some wonder how the tool will contribute to our productivity. Others expect it to take their jobs, in fields from marketing to journalism.

In recent months, there was also a case of ChatGPT being hired and fired for news production. An Argentine TV channel, “TN”, had ChatGPT write a crime report about a woman being robbed by a man in Buenos Aires, Argentina. According to the company, the article looked “okay” format-wise. However, the chatbot got many facts wrong, such as the victim’s age and name and whether the culprit was identified, and it even fabricated a quotation.

News Reporting Performance

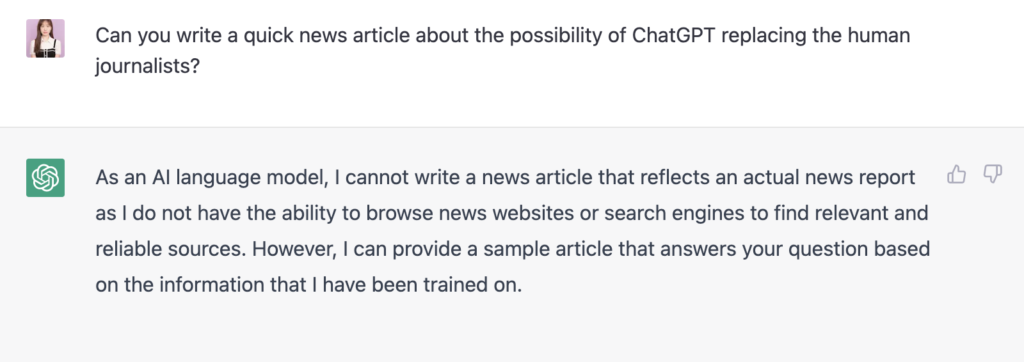

ChatGPT does not always give you the correct answer. The chatbot is still in development, and its information may not be 100% accurate, depending on the details and depth of your questions. This means it may bear the risk of misinformation and disinformation without a human professional’s supervision. To test the chatbot’s fact-checking and news-reporting performance, I had ChatGPT write a simple article about itself: I asked the chatbot if it could write an article about the possibility of ChatGPT replacing journalists.

Although the chatbot admitted that it could not “reflect an actual news report as it cannot browse news websites or search engines to find relevant and reliable sources”, it could provide a sample article based on what it was trained on.

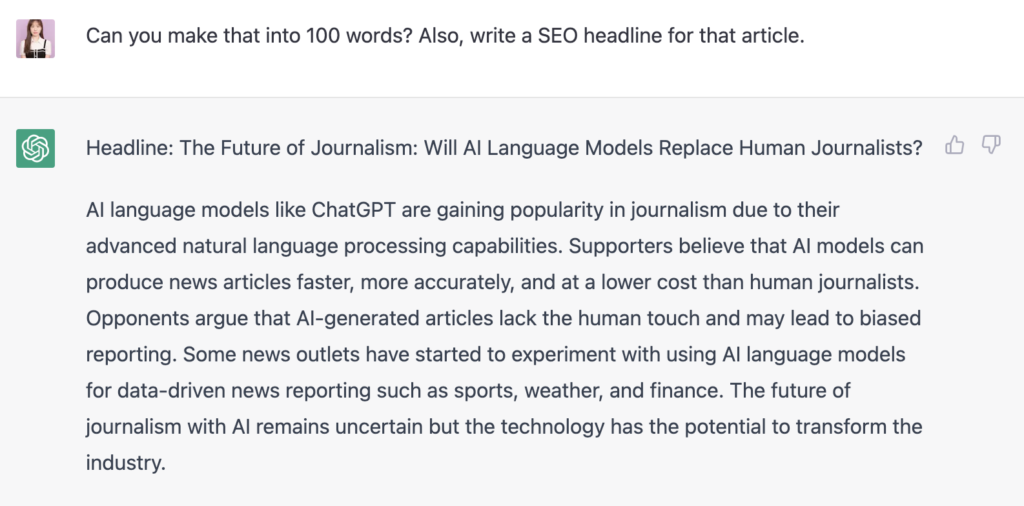

Within seconds, the tool returned a 250-word article on the topic. It briefly introduced the tool, noted that the topic has been gaining attention recently, and presented opinions from the tool’s supporters and opponents. It concluded that only “time can answer” whether AI language models can take human journalists’ jobs. The article stayed balanced about the tool’s writing performance and included both sides’ opinions. For a draft produced within seconds, it is indeed impressive.

As the tool admitted, this wouldn’t be a good news article: because the tool cannot browse sources, the piece is not based on facts or research. It reads like an opinion essay, which would have been enough for a class assignment.

I then asked the tool to summarise what it had written in 100 words, a length news agencies often ask for in their publications. The tool had no problem producing a shortened version of the article and a cool search-optimised headline.

This shows how capable the tool is technology-wise: it can write articles in a requested template and format within seconds. It can also give ideas or inspiration to writers who are stuck.

However, the question of source collection remains. ChatGPT says that it does not yet have a preferred source for data collection and depends heavily on the information provided by users, including news sources.

The tool does not clearly identify which sources it is using, possibly because the list of sources is too long. As a result, it may incorporate fake information from unverified sources into its answers, which could be a critical problem for fact-based news reporting. Some reports expect ChatGPT’s remarkable performance to soon render news agencies and search engines obsolete. Will the tool become as good as humans, capturing human-like nuances and producing unbiased, fact-based articles? As the tool says, only time will tell.