The AI Act agreed by the European Union is the world's first law regulating artificial intelligence. The agreement on the rules governing the use of AI technologies came after intense negotiations lasting over 30 hours between the Commission, the Parliament and the Council of the EU. Representatives of the 27 member states approved an unprecedented text, confirming that Europe is at the forefront of efforts to govern the use of new technologies. The step builds on earlier EU legislation: the General Data Protection Regulation (GDPR) on privacy and, more recently, the Digital Services Act aimed at the major digital platforms.

The AI Act responds to the European Commission's 2021 proposal for a common regulatory and legal framework for AI. It concludes a long process, prolonged by the rapid development of generative AI (GenAI). GenAI, together with the use of biometric identification tools, especially facial recognition, created divisions between member states.

AI Act in Europe

For the European institutions, the AI Act is a regulation that protects fundamental rights, democracy, the rule of law and environmental sustainability from high-risk AI. It also aims to stimulate innovation and make Europe a leader in the field. The rules set obligations for AI according to its potential risks and level of impact.

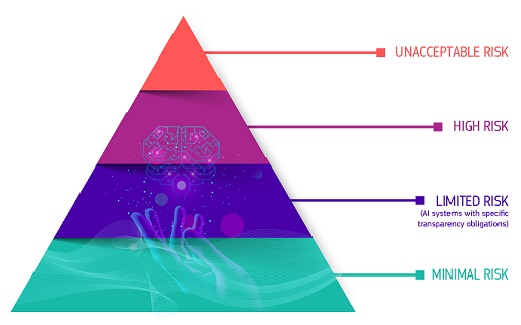

In this sense, European law treats AI technologies and their practical applications differently according to the risk they pose. It distinguishes four tiers:

- minimal risk;
- limited risk, with specific transparency obligations;
- high risk;
- unacceptable risk.

The lowest tier covers most AI systems, such as spam filters. These will not be subject to obligations because they pose no threat to citizens' rights and safety.

Chatbots and deepfakes

The limited-risk tier, with its specific transparency obligations, covers systems such as chatbots. Users must be clearly informed that they are interacting with a machine rather than a human being. Likewise, deepfakes and similar manipulated content must be labelled as such.

As the name suggests, systems posing an unacceptable risk run counter to fundamental human rights and will therefore be banned. The list includes:

- applications that manipulate human behaviour to deceive users;
- various predictive policing applications;
- certain uses of biometric systems, such as emotion recognition in the workplace.

The picture becomes more nuanced for high-risk AI systems. These include systems used in critical infrastructure, such as the management of gas, electricity and water. They also include medical devices and systems governing access to educational institutions or the recruitment of personnel. The group further covers systems used in law enforcement and the administration of justice.

Biometric identification systems

Biometric identification systems are also classed as high risk. As with other high-risk systems, companies and any other entity wishing to use them must meet a number of requirements. These include risk mitigation, activity logging, clear information for users, accuracy and cybersecurity.

The main obstacle to approving the regulation was whether to allow facial recognition for law enforcement purposes in certain circumstances or to ban it outright. The European Parliament wanted a total ban, while the Council, representing the member states, favoured a more permissive approach.

Countries such as Hungary, Italy and France pushed for the use of real-time facial recognition. They also pushed for algorithms that estimate who might commit a crime and where. France was particularly interested because of the Paris Olympic Games in summer 2024, for which it had already planned extensive use of AI systems for security. In the end, biometric recognition was banned with some exceptions. These cover situations of foreseeable and evident threat, such as terrorist attacks, and searches for people suspected of the most serious crimes.

The agreement reached in Brussels must still be formally approved by the European Parliament and the Council. It will then enter into force 20 days after publication in the Official Journal. However, the AI Act will apply only two years after its entry into force. This transitional period gives all parties time to make the changes needed to comply with the new rules.

Companies that fail to comply will face fines set as a fixed amount or a percentage of global annual turnover, whichever is higher:

- €35 million or 7% for violations involving prohibited AI systems;
- €15 million or 3% for breaches of the other obligations;
- €7.5 million or 1.5% for supplying incorrect information.

Lower, more proportionate caps will apply to startups and small and medium-sized enterprises.
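The way the fixed amount and the turnover percentage combine can be made concrete with a small calculation. The sketch below uses the figures reported for the provisional agreement. It assumes the maximum fine is the higher of the two figures for ordinary companies. For startups and SMEs, whose exact treatment is not detailed above, it assumes the lower of the two figures applies. The tier names and the `max_fine` function are hypothetical and purely illustrative; they are not part of any official tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    fixed_eur: int        # fixed cap in euros
    turnover_bp: int      # share of global annual turnover, in basis points (1% = 100 bp)


# Hypothetical tier names; figures as reported for the provisional agreement.
FINE_TIERS = {
    "prohibited_ai": FineTier(35_000_000, 700),          # €35M or 7%
    "other_obligations": FineTier(15_000_000, 300),      # €15M or 3%
    "incorrect_information": FineTier(7_500_000, 150),   # €7.5M or 1.5%
}


def max_fine(violation: str, global_turnover_eur: int, is_sme: bool = False) -> int:
    """Return the illustrative maximum fine in euros for a violation tier."""
    tier = FINE_TIERS[violation]
    # Integer arithmetic avoids floating-point rounding on large turnovers.
    turnover_based = global_turnover_eur * tier.turnover_bp // 10_000
    if is_sme:
        # Assumption: startups and SMEs face the lower of the two figures.
        return min(tier.fixed_eur, turnover_based)
    # Assumption: other companies face the higher of the two figures.
    return max(tier.fixed_eur, turnover_based)
```

Consider a company with €2 billion in global turnover that uses a prohibited system. Seven percent of its turnover (€140 million) exceeds the €35 million fixed amount, so the higher figure is the cap. For a company turning over €100 million, 7% is only €7 million, so the €35 million fixed amount dominates. Under the SME assumption, the cap would drop to €7 million.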