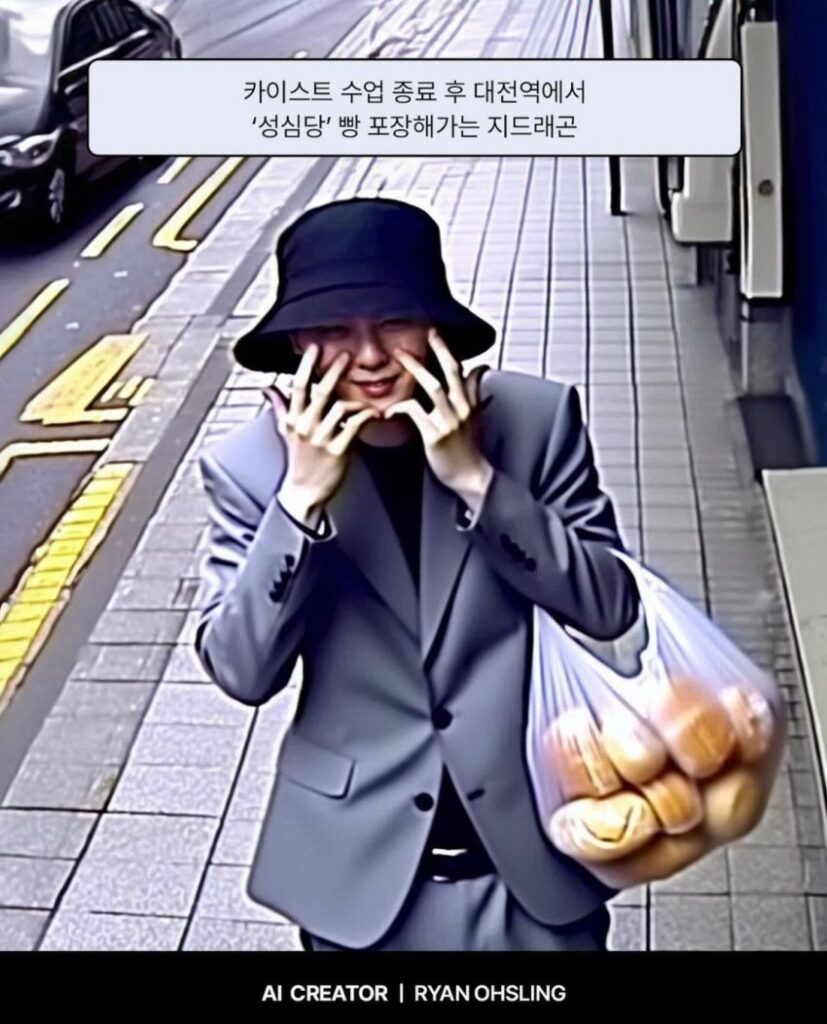

In June 2024, an artificial intelligence-generated picture of the well-known K-pop star G-Dragon holding a transparent bag of bread while making peace signs with his hands went viral on X in South Korea.

The popular post on X showed an image with the caption “G-Dragon returning home after buying bread from Sungsimdang at Daejeon station, following his classes at KAIST”, and the AI creator’s name watermarked at the bottom.

The image was produced after news went viral that the celebrity had accepted a position as a visiting professor in the mechanical engineering department at the Korea Advanced Institute of Science and Technology (KAIST), which is located in Daejeon. Paired with his apparent splurge at the famous local bakery chain, the AI-generated picture quickly garnered views across different social media platforms. On X alone, it recorded 2.8 million views, with many users believing the picture to be real, quoting the post and commenting on how happy he seemed.

The picture soon caught the attention of Korean media as well, which clarified that it was an AI creation, not a real photograph. Outlets reported that the original creator of the image was RYAN OHSLING, who makes fake images on commission through Instagram and Pinterest.

With the image spreading faster than the news coverage that debunked it, recent comments on the post still express shock at learning that it was made with AI. Some users suggested that this shows how easily AI can deceive people online, even when a watermark states the creator’s name.

4i Magazine reached out to the creator to ask about the reasons behind his production but did not receive a reply.

Difficulty of Telling What’s Fake and Real

Hyper-realistic gen AI productions have been causing a stir outside of South Korea, too.

One of the most controversial examples is a video in which Ukraine’s President Volodymyr Zelensky appeared to concede defeat to Russia, which spread mainly on YouTube and Facebook. Shortly after, a video of President Vladimir Putin of Russia appearing to declare peace was uploaded to YouTube. The videos were found to be fake AI productions and were promptly deleted by YouTube. However, some of the clips can still be found across social media.

Productions assisted by gen AI can potentially undermine democracy when people are unable to tell fake content from real.

Professors Sarah Kreps and Doug Kriner ran a field experiment for their 2023 study “How AI Threatens Democracy”, sending randomised advocacy letters composed by humans and by GPT-3 to 7,200 legislators. The experiment, conducted in 2020, tested whether legislators could distinguish AI-composed messages from human-composed ones, as indicated by their choosing not to respond to machine-generated letters.

On three of the six issues, the response rate was statistically almost the same for machine- and human-written letters. On the remaining three, the AI-generated letters recorded a 2 per cent lower response rate.

Based on the results, the researchers concluded that legislators cannot perceive differences between AI- and human-generated content. This could skew the direction of political discourse if a malicious actor generates large numbers of unique messages and influences “legislators’ perceptions of which issues are most important to their constituents as well as how constituents feel about any given issue.”

Most recently, fake AI creations depicting the candidates in the 2024 United States presidential election have been at the centre of discussion for spreading misinformation online.

These productions included images of Republican candidate Donald Trump surrounded and supported by groups of black people, which were reportedly shared among many African Americans who believed the photos to be real.

Another example was a robocall to residents of New Hampshire that told them, in a voice mimicking incumbent President Joe Biden, that they would lose their right to vote if they cast a ballot in the state’s primary. It was later found that the call was made by a political consultant who wanted to push for AI-related rules.

Aware of the potential risks to politics, some AI platforms are introducing measures to curb malicious uses. For example, the image generator platform Midjourney started blocking users from making fake images of Donald Trump and Joe Biden in March 2024.

Efforts to Regulate Fake Productions and Prevent Real Problems

Ian Bremmer, a professor at Stanford University, wrote in his essay for Foreign Affairs last year that AI can affect the structure of current world powers. In democratic countries, AI widely collects personal information without consent and utilises it for commercial purposes, and it can become a tool for authoritarian regimes to suppress freedom of speech and expression, he said.

Professor Bremmer added that AI regulations are necessary to maintain the justification of social consensus in a state that protects democracy and provides public resources.

Some experts emphasise the role of legacy media in the ocean of AI-generated misinformation. Legacy media should maintain a gatekeeping system to crosscheck facts or newly obtained information from various sources while following journalism ethics.

Imran Ahmed, the CEO and founder of the nonprofit Center for Countering Digital Hate, says that social media platforms and AI companies need to increase their efforts to shield users from the negative impacts of AI-generated content.

Referring to the picture of Donald Trump with black people, he was quoted as saying by AP: “If a picture is worth a thousand words, then these dangerously susceptible image generators, coupled with the dismal content moderation efforts of mainstream social media, represent as powerful a tool for bad actors to mislead voters as we’ve ever seen.”

Many countries in the West have already been taking legislative actions against the spread of AI-powered productions.

In May 2024, the European Union legislated the Artificial Intelligence Act, the first international regulation concerning the uses of AI. The act asks providers and users of AI systems to label AI-generated content clearly and transparently. It defines four levels of risk: minimal, limited, high and, at the top, unacceptable. Most AI-generated content falls under the limited risk category, where the risk lies in providers and users failing to identify content as AI-generated.

A company violating the rules for the limited risk category can be fined up to 15 million euros or 3 per cent of its global annual turnover in the previous financial year, whichever is higher.

In the U.S., there is no federal law to regulate gen AI, but several states have introduced deepfake-related rules for election campaigns and sexual content. For example, the state of Texas revised its election law in September 2019, banning deepfake videos of candidates published or distributed within 30 days of an election.

Experts say that Asian countries should also strengthen their digital literacy education for the public and penalties for malicious uses of gen AI.

Lee Dae-sung, a professor at Gyeonggi University, emphasised that the foremost concern in regulating advanced AI-generated content, such as deepfakes, is the establishment of a legal framework to mitigate malicious applications of AI technologies. He noted that while many countries are introducing relevant legislation, there remain areas where the current legal system is inadequate.

“Especially concerning AI-generated content of a sexual or political nature, we require an institutional strategy to identify and punish (the distributors and providers) and safeguard the victims,” he stated.