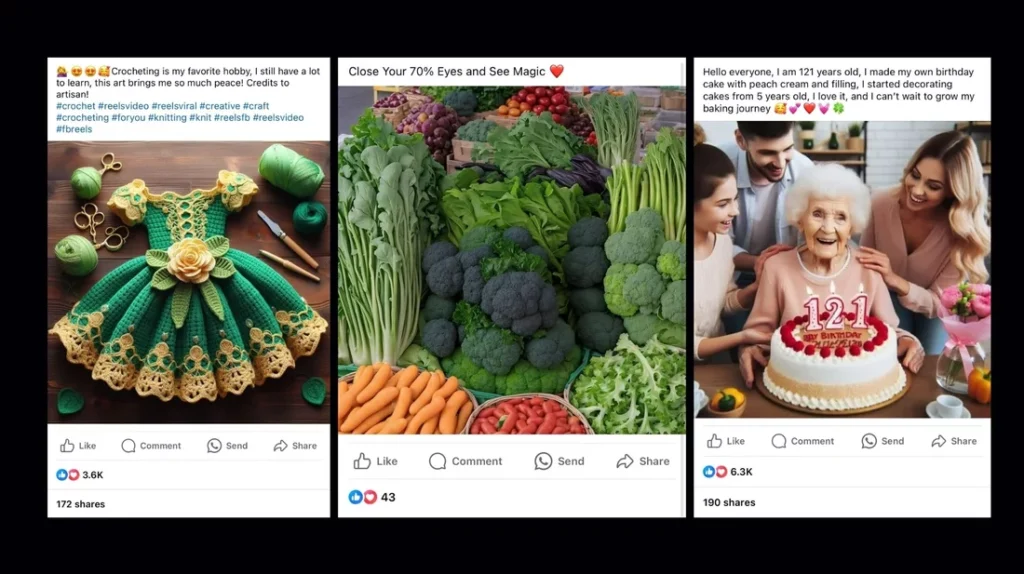

The rise of AI-generated content on social media platforms like Facebook has sparked significant concern as users increasingly report bizarre and unsettling posts filling their feeds. These images, often strange composites like depictions of Jesus made from vegetables, have left users feeling that their online spaces have become “bizarre” and “creepy.” However, the implications of this AI-driven phenomenon extend far beyond mere discomfort.

The surge of AI-generated content

Recent investigations, including a study by researchers from Georgetown and Stanford, have highlighted a disturbing trend: AI-generated images and posts are being systematically pushed to users’ feeds, often without their knowledge. These posts, which range from distorted pictures of religious figures to emotionally exploitative depictions of disabled individuals, are not just oddities—they are often part of broader scams and spam strategies designed to manipulate and profit from unsuspecting users.

Platforms like Facebook have been found to actively recommend these AI-generated posts, creating a feedback loop that amplifies their reach and engagement. For example, a post featuring an AI-generated image of a log cabin might not only go viral but also direct users to external sites designed to harvest ad revenue or even deceive them into purchasing non-existent products.

The motivation behind AI spam

The creators behind these spam pages are primarily driven by profit or clout rather than any ideological agenda. The pages use AI-generated images to create visually sensational content designed to attract as many views, likes, and shares as possible. Some have amassed hundreds of thousands of followers, with individual posts racking up millions of interactions. Not every page fits this pattern, however. A particularly concerning aspect is that many pages show no clear financial motivation: they appear to be accumulating an audience for purposes that remain unknown, and they could pivot toward more nefarious activities in the future.

Facebook’s role in promoting AI-generated content

Facebook’s algorithm plays a crucial role in this phenomenon. The platform’s recommendation system has increasingly prioritized content likely to generate engagement. Because AI-generated spam images are novel and often unsettling, they tend to draw reactions, so they are pushed to users who do not even follow the pages posting them. This algorithmic boost increases the visibility of such content and contributes to the spread of misinformation, as many users are unaware that these images are artificially generated.

Moreover, Facebook’s own Creator Bonus Program, which rewards viral content, has inadvertently incentivized the production and dissemination of these AI-generated spam posts. Content creators from countries like India, Vietnam, and the Philippines have generated significant income by producing and posting these bizarre AI-generated images in large quantities, further fueling the spread of such content.

The need for greater transparency

As AI-generated spam becomes more pervasive, there is an urgent need for social media platforms to implement stricter transparency and labeling measures. Users need to be informed when they are viewing AI-generated content, and platforms like Facebook must take greater responsibility for moderating the spread of such content.

Additionally, public awareness and education are critical. Social media users need the tools and knowledge to recognize AI-generated spam images and to understand the risks of interacting with such content. This could involve public service announcements or educational campaigns that teach users how to identify and critically assess AI-generated content.

The rise of AI-generated spam on social media is not just an annoyance; it represents a significant shift in how content is created and consumed online. As AI tools become more accessible and sophisticated, the potential for their misuse grows, with implications for everything from consumer fraud to the erosion of trust in digital content. Addressing this issue will require a concerted effort from social media platforms, policymakers, and the public to ensure that AI-generated content is used responsibly and transparently.