Amazon has released its new generation of Nova artificial intelligence (AI) models, a family of five products. Three are designed for processing and understanding content, and two for generating creative content such as images and video. The models can be accessed on Amazon Bedrock. The company unveiled them during its annual “AWS re:Invent” event, where it shared its latest work in generative AI, machine learning, and cloud computing.

Among the most notable announcements was the Nova family of foundation models, which can process text, images, and video and understand graphs and other multimedia content.

The company also announced that it will release two more Amazon Nova models in 2025: a speech-to-speech model that will understand natural-language voice input, interpreting both verbal and non-verbal signals, and a native multimodal model capable of handling text, images, audio, and video as both input and output.

Last month, Amazon also doubled down on its investment in OpenAI’s competitor Anthropic, putting another US$4 billion into the US-based AI business. The company is using Anthropic’s Claude to revamp its virtual assistant, Alexa.

The tech giant also announced this month that it is building its own AI supercomputer, powered by its in-house Trainium chips.

Amazon Nova’s different models

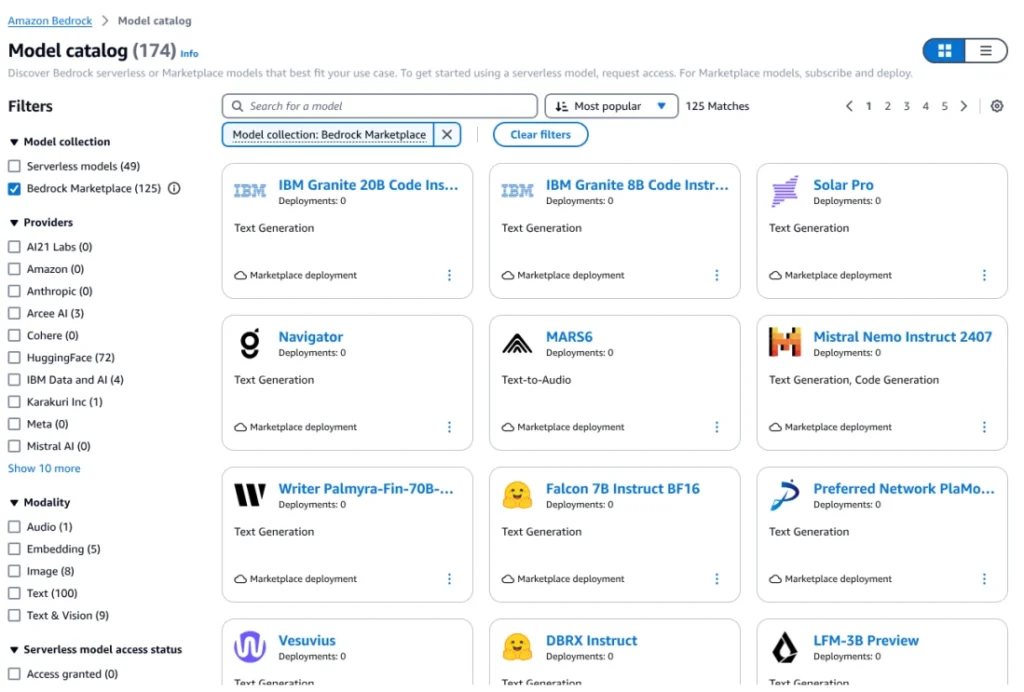

According to the tech company, Amazon Nova includes five models: three for understanding and two for content generation. All of them are already available to developers of generative AI applications on Amazon Bedrock, alongside the platform’s other foundation models.

Amazon Nova Micro is a text-only model that offers low-latency responses at a low cost, while Amazon Nova Lite is a multimodal model that quickly processes image, video, and text inputs.

Amazon Nova Pro, meanwhile, was presented as a high-capacity multimodal model that combines precision and speed for a wide range of tasks. Nova Canvas and Nova Reel are next-generation image- and video-generation models, respectively.

Finally, the company describes Nova Premier as the “most capable” multimodal model of all, intended for complex reasoning tasks. It will also be used as a guide for developing custom models, a feature that will be available in the first quarter of 2025.

All models support a wide range of tasks in 200 languages, though they are optimised for English, German, Spanish, French, Italian, Korean, Arabic, Chinese, Russian, Hindi, Dutch, and Turkish.

Convincing intelligence and content generation

The new models can also be fine-tuned on a customer’s own examples, allowing them to “learn what matters most to customers from their own data (including text, images, and videos), while Amazon Bedrock trains a custom model that will provide personalized responses,” the company notes.

The new models “are designed to help both internal and external developers” and address challenges in application development, according to Rohit Prasad, Senior VP of Amazon Artificial General Intelligence.

He also said they aim to provide “convincing intelligence and content generation” and offer “significant progress” in latency, cost-efficiency, and customization.

The company underscores that Amazon Nova Micro, Amazon Nova Lite, and Amazon Nova Pro are at least 75% more affordable than the highest-performing models in their respective intelligence classes on Amazon Bedrock.